Sensor data fusion: Camera & Radar

This was a team project done under the supervision of Technische Hochschule Ingolstadt. The goal was to perform late fusion of radar and camera detections.

This was really fun to work on: I got to learn the nitty-gritty of model training, evaluation, and spatial fusion of detections. I would like to highlight a few sections of the project here.

Image Augmentation:

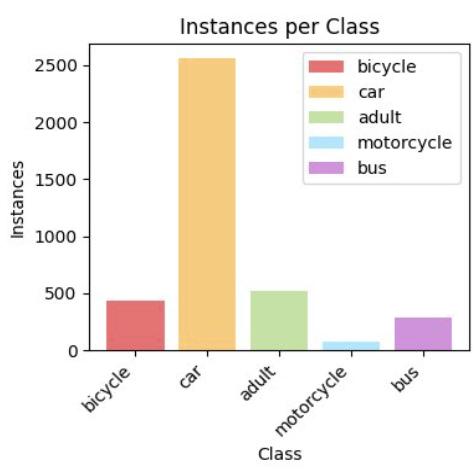

The primary problem when working with road user data is heavy imbalance among the classes. We were working with 6 road user classes with the following distribution.

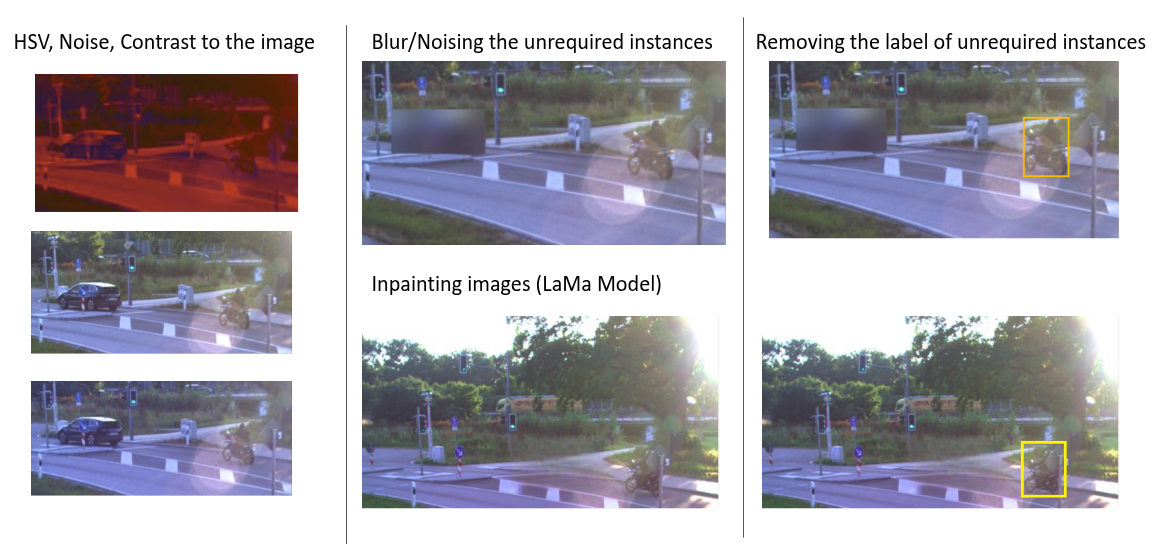
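To make the imbalance concrete, here is a minimal sketch of one common way to decide how much augmentation each class needs: repeat (or augment) samples of a class until it roughly matches the most frequent class. This is an illustration, not necessarily what the project did. The function name `oversampling_factors`, the `cap` parameter, and the label counts are all hypothetical and do not reflect the real dataset distribution.

```python
from collections import Counter
import math


def oversampling_factors(labels, cap=10):
    """Return, per class, how many times its samples would be repeated
    so that the class roughly matches the most frequent one.

    `cap` limits the factor so very rare classes are not duplicated
    excessively (an assumed safeguard, not a project setting).
    """
    counts = Counter(labels)
    largest = max(counts.values())
    return {cls: min(cap, math.ceil(largest / n)) for cls, n in counts.items()}


# Hypothetical labels, only for illustration
labels = ["car"] * 800 + ["pedestrian"] * 200 + ["bicycle"] * 50
factors = oversampling_factors(labels)
print(factors)
```

With these made-up counts, the rarest class hits the cap, which is a hint that plain duplication is not enough and stronger augmentation is worth trying for it.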

We tried several image augmentation techniques; one of the most interesting was image inpainting.

Model Fine-Tuning:

We used the Ultralytics library to fine-tune YOLOv8-large on the INFRA-3DRC dataset.
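For reference, a fine-tuning run with Ultralytics typically looks like the sketch below. The dataset config path `infra3drc.yaml` and the hyperparameter values are assumptions for illustration, not the settings used in the project. `yolov8l.pt` is the pretrained YOLOv8-large checkpoint name used by Ultralytics.

```python
def train_settings(data_yaml="infra3drc.yaml"):
    """Collect training arguments in one place.

    The path and the values below are placeholders (assumptions),
    not the project's actual configuration.
    """
    return {
        "data": data_yaml,  # dataset config: image paths and class names
        "epochs": 50,       # assumed value
        "imgsz": 640,       # input image size
        "batch": 16,        # assumed value
    }


def fine_tune(settings):
    """Fine-tune the pretrained YOLOv8-large weights with the given settings."""
    # Imported here so the settings helper works without ultralytics installed
    from ultralytics import YOLO

    model = YOLO("yolov8l.pt")  # load pretrained weights
    return model.train(**settings)
```

Calling `fine_tune(train_settings())` would start training, provided the `ultralytics` package is installed and the dataset config file exists.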

Sensor Fusion:

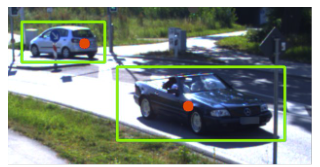

We performed spatial fusion of detected objects from two different sensors: radar and camera. In the image, the box is the detection result from the camera (YOLOv8), and the red dot represents the centroid of a radar cluster (the detected object from the radar).
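The association step can be sketched roughly as follows. This is a simplified illustration, not the project's actual fusion logic: it assumes the radar cluster centroids have already been projected into image pixel coordinates, and it greedily matches each centroid to the camera box that contains it and whose center is nearest. The `Box` class and `fuse` function are hypothetical names.

```python
from dataclasses import dataclass
import math


@dataclass
class Box:
    """Camera detection as a pixel-space bounding box."""
    x1: float
    y1: float
    x2: float
    y2: float
    label: str

    def contains(self, u, v):
        return self.x1 <= u <= self.x2 and self.y1 <= v <= self.y2

    def center(self):
        return ((self.x1 + self.x2) / 2, (self.y1 + self.y2) / 2)


def fuse(boxes, radar_points):
    """Greedily pair each radar centroid (u, v) with a containing box.

    Returns a list of (box_index, point_index) pairs. Each box is used
    at most once; centroids outside every box stay unmatched.
    """
    matches = []
    used = set()
    for pi, (u, v) in enumerate(radar_points):
        best, best_dist = None, math.inf
        for bi, box in enumerate(boxes):
            if bi in used or not box.contains(u, v):
                continue
            cx, cy = box.center()
            dist = math.hypot(u - cx, v - cy)
            if dist < best_dist:
                best, best_dist = bi, dist
        if best is not None:
            used.add(best)
            matches.append((best, pi))
    return matches


# Hypothetical detections, only for illustration
boxes = [Box(100, 100, 200, 300, "pedestrian"), Box(400, 150, 700, 350, "car")]
radar_points = [(550.0, 260.0), (150.0, 210.0), (900.0, 50.0)]
matches = fuse(boxes, radar_points)
print(matches)
```

A greedy match like this is easy to follow but order-dependent; with crowded scenes, a global assignment (for example the Hungarian algorithm) would be a more robust choice.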